Abstract

Background

Cancer patients often experience treatment-related symptoms which, if uncontrolled, may require emergency department admission. We developed models identifying breast or genitourinary cancer patients at the risk of attending emergency department (ED) within 30-days and demonstrated the development, validation, and proactive approach to in-production monitoring of an artificial intelligence-based predictive model during a 3-month simulated deployment at a cancer hospital in the United States.

Methods

We used routinely-collected electronic health record data to develop our predictive models. We evaluated models including a variational autoencoder k-nearest neighbors algorithm (VAE-kNN) and model behaviors with a sample containing 84,138 observations from 28,369 patients. We assessed the model during a 77-day production period exposure to live data using a proactively monitoring process with predefined metrics.

Results

Performance of the VAE-kNN algorithm is exceptional (Area under the receiver-operating characteristics, AUC = 0.80) and remains stable across demographic and disease groups over the production period (AUC 0.74–0.82). We can detect issues in data feeds using our monitoring process to create immediate insights into future model performance.

Conclusions

Our algorithm demonstrates exceptional performance at predicting risk of 30-day ED visits. We confirm that model outputs are equitable and stable over time using a proactive monitoring approach.

Plain language summary

Patients with cancer often need to visit the hospital emergency department (ED), for example due to treatment side effects. Predicting these visits might help us to better manage the treatment of patients who are at risk. Here, we develop a computer-based tool to identify patients with cancer who are at risk of an unplanned ED visit within 30 days. We use health record data from over 28,000 patients who had visited a single cancer hospital in the US to create and test the model. The model performed well and was consistent across different demographic and disease groups. We monitor model behavior over time and show that it is stable. The approach we take to monitoring model performance may be a particularly useful contribution toward implementing similar predictive models in the clinic and checking that they are performing as intended.

Similar content being viewed by others

Introduction

Modern cancer therapies are designed to eliminate disease and prevent recurrence. To this end, treatments including chemotherapy, immunotherapy, radiation, and surgery have proven to be largely effective with year-on-year reductions in mortality for most cancer types1. However, therapies are associated with negative effects that can range from mild to life threatening and may persist long after cancer treatment has been completed.

During therapy, patients with cancer often experience treatment-induced symptoms including fever, infection, pain, dehydration, neutropenia, and sepsis which may require urgent care in the emergency department (ED)2. Visits to the ED are common and between 41% and 64% of ED visits could be potentially avoidable as they are related to poorly-controlled disease or treatment-related symptoms such as fever, dehydration, and pain2.

Better outpatient management of these symptoms can reduce the risk of ED visits and therefore unplanned ED visits could be reduced by accurate estimation and feedback of risk3,4,5. Correctly identifying patients at high risk of ED admission could facilitate timely therapies to reduce symptom burden and, if necessary, alterations to cancer therapy regimens. Increasing availability of patient care data via the electronic health record (EHR) as well as the proliferation of prediction tools have created an opportunity to guide individual risk assessment to provide timely management of treatment-related symptoms and to reduce the incidence of ED visits.

In recent years, studies have used a combination of EHR data and machine learning to predict ED admission within 7–180 days for cancer patients receiving diverse therapies6,7,8,9,10,11,12,13,14. Those studies have demonstrated moderate-to-good prediction performance measured using the area under the receiver-operator characteristic curve (AUC) of 0.62–0.75 on hold-out testing datasets. To our knowledge, no previous study in this area has evaluated or described a process for evaluating model performance once deployed in production, an important consideration for ML models.

Deploying ML models to perform live predictions must be done thoughtfully due to the complexity of the data which inform the model. Machine learning models are reliant on data and future changes in data quality, distribution, or definition may adversely affect model validity. Electronic health record data, which provides detailed patient histories and descriptions of patient interactions across health systems, may be especially sensitive to temporal variations in data distribution and quality as well as changing definitions across platforms. Because future data may differ, it is not sufficient to validate and ‘freeze’ machine learning models in time; evaluations of ML model performance must be made throughout the model lifespan15.

Processes for real-time monitoring of system behavior combined with automated response is required for ongoing ML operations are well understood and implemented throughout the tech industry but are not currently well represented in the literature relating to AI-based clinical decision-making tools16. One challenge in assessing the ongoing validity of deployed AI-based clinical decision-making tools is that popular methods which compare predictions to ground truths (e.g., AUC) require the prediction timeframe (e.g., 30 days; 5 years) to have elapsed before ground truths can be known and model performance assessed.

The primary aim of this research was to develop a robust, reliable, and explainable model to predict 30-day ED admission while demonstrating a novel process for evaluating model behavior, including ongoing assessments of data quality and model performance, during a 3-month model deployment period in which the model was exposed to live data but outputs were not fed back to clinical staff.

We were able to produce a model with exceptional performance when predicting risk of 30-day ED risk on a hold-out testing data set. Our proactive 77-day in-production monitoring period confirms the stability of the algorithm with regards to its performance across demographic and disease groups.

Methods

We recruited patients from the breast and genitourinary medical oncology at University of Texas MD Anderson Cancer Center, a specialist cancer center in the United States of America. Ethical approval was provided by the MD Anderson Cancer Center Institutional Review Board (IRB). Informed consent was waived by the IRB due to the planned retrospective analyses of routinely-collected clinical data. We used this data to train a ML model to predict whether a patient would visit the ED within 30 days.

Featurization pipeline

We developed a scalable featurization pipeline which could consistently transform EHR data into usable features. Features were derived primarily through the creation of semantic classes expressed through standard clinical terminologies. The classes were then applied to EHR data, largely Health Level 7 (HL7) standard Fast Healthcare Interoperability Resources (FHIR) resources, collected from Epic Systems. We calculated social determinants of health (SDoH) data using the definitions outlined in the United States Health and Human Services (HHS) Healthy People 2020 using both zip code & census tract data17.

Dataset (model training and testing)

We utilized data collected from patients attending GU and breast medical oncology departments at the study site. We aggregated data between April 1990 and April 2022 and created a featurization pipeline to continuously ingest and prepare future data for model predictions and evaluation. To ensure that our featurization process was scalable across institutions we exported data from the EHR in compliance with the 21st Century Cures Act and the United States Core Data for Interoperability (USCDI) standards18,19. Our dataset consisted of 184,138 observations from 28,369 patients. A new observation was defined every time a new lab value, vital sign, or staging result was detected in the EHR. The data were split into training and test samples using a 4:1 ratio. We ensured that no individual patient had observations in both training and testing sets.

We included a total of 222 features derived from 99 patient data elements, covering demongraphic, vital sign, laboratory, SDoH, comorbidity and cancer condition (cancer type and clinical and pathologic staging) data, that were chosen through exploratory analysis and feedback from clinical intelligence and informatics experts. We provided the description and distributions of the data elements included for model training in Supplementary Data 1 and 2. Categorical features were transformed using one-hot encoding and numeric features were either bucketized or min-max scaled. The featurization strategy automatically handled missingness by setting all relevant bucket columns or one-hot encoded columns to zero. Machine learning models were trained to predict whether a patient would visit the emergency department (ED) within 30 days following an observation. Further featurization details are presented in Supplementary Methods.

Model training and testing

We developed a variational autoencoder K-nearest neighbor (VAE-kNN) algorithm20. The latent feature vector from the VAE was fine-tuned with contrastive learning21,22,23. We chose this model due to both its high performance in predictive tasks as well as the intuitive way in which model outputs can be understood by describing the neighborhood that was used to make the prediction.

The VAE-kNN model was evaluated against a logistic regression with elastic net penalty (LR)24, random forest (RF)25, multinomial Naive Bayes (MNB)26, kNN (without VAE encoding)27, and gradient boosting machine (GBM) model27. We used Bayesian optimization with threefold cross-validation to tune the model hyperparameters using AUC as the performance criterion. Output probabilities were assessed the AUC and Brier score28,29. Further information relating to the VAE-kNN algorithm is given in Supplementary Methods.

Model interpretability

An essential component of ED risk assessments is the ability to explain predictions so that clinicians can intervene prior to unplanned ED admission. We used Shapley additive explanations (SHAP) to assign each feature an impact value for the entire training set as well as for individual predictions17.

Simulated model deployment

Following successful model training and validation we put our validated production model into a simulated deployment lasting 77 days where it was exposed to live data, but model outputs were not fed back to clinical teams. The purpose of this simulated deployment was to observe model behavior in a real-world setting. We break down our approach into three principal elements to ensure reliable, reproducible, and safe delivery of ML predictions in practice. An overview of our strategy to monitor model health is shown in Fig. 1.

For a model to be deployed and remain in production, it must pass a series of internal checks. First, we expect that the overall performance of the model (measured by AUC) will not regress beyond a limit of 0.10 points. We also inspect the performance across several demographics (birth sex, race, ethnicity, and cancer type) to ensure that it follows the 4/5th rule (each demographic subgroup is within 20% of all others). Model performance, both overall and for different demographic or clinical groups, must remain within 0.10 AUC points of the originally validated model to remain in production. Models that violate any performance standards for 2 consecutive weeks will be investigated and may be retrained.

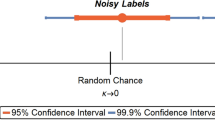

Central to our framework for continuous monitoring of model behavior and performance is the concept of lagging and leading indicators of model health (see Table 1). Lagging indicators, which include overall model prediction performance, are only available after the prediction time period (30 days in the current study) has elapsed and ground truth outcomes are known. Conversely, leading indicators are available prior to or immediately after a prediction is made.

Within this framework, a leading indicator is one that is immediately available (i.e., prior to the 30-day window to establish whether there had been an ED visit following a prediction). Leading indicators in our framework include data quality as well as the number and type of predictions made. Conversely, a lagging indicator can only be established once the prediction period has elapsed. The ability of leading indicators to signpost potential issues with model health before the ground truth is known may make them especially useful in ensuring safe and effective AI in practice.

Data drift, a leading indicator of model health, is measured using population stability index (PSI). We monitored PSI weekly during deployment as well as the volume and nature of predictions. After the true outcomes of patients were known we measured AUC overall performance as well as for the demographic subgroups on a weekly basis. Breaches in our data drift and performance metrics resulted in automated alerts notifying the Ronin team to investigate and resolve any issues that might affect model performance.

We evaluated bias during deployment using a combination of absolute and relative rules governing the model performance. If there was a 0.10 point reduction in AUC from baseline for the entire population or for any group divided by birth sex, race, ethnicity, or cancer type an alert was automatically generated. Similarly, the 4/5th rule is applied to ensure that the AUC in each demographic subgroup is within 80% of all others.

We conducted all analyses in the Python programming environment (Version 3.9) utilizing SciKit-Learn, HyperOpt; scikit-optimize; SHAP library, and TensorFlow/Keras. We reported our in accordance with the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis the (TRIPOD) statement30.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Results

Description of our sample

We assembled 184,138 observations that were collected from the Epic EHR. These records represented details of medical history and care received for 28,369 unique patients, a total of 16,000 (8.75%) observations were made within 30 days of an ED visit.

Demographic information is presented in Table 2. Most observations were taken from patients with a diagnosis of GU cancer (74,215; 50%) with 51,507, (35%) observations taken from patients with a diagnosis of breast cancer. The average age of participants in our sample was 61 (standard deviation = 13) and 53% were male. Within our sample, 28,307 observations were made from patients who had attended appointments at either the GU or breast departments but did not have a recorded diagnosis of breast or GU cancer.

Featurization

Details of the features developed using our scalable featurization pipeline including details of bucketization are presented in Supplementary Methods and Supplementary Data 1, 2, and 3.

Model development and training

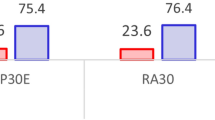

All the models we tested performed well on the testing dataset (AUC range 0.76–0.80; see Supplementary Table 1) and were well calibrated (Brier Score range 0.07–0.13). Our preferred VAE-kNN algorithm was, alongside the gradient-boosted machines, the strongest performing algorithm (see Fig. 2a and b). The VAE-kNN performed equitably across all sensitive groups (Fig. 2c).

Model interpretability

Interpretability visualizations are provided in Fig. 3. Figure 3a displays the mean SHAP value for the top 20 features used by the VAE-kNN. We present two separate use cases relating to an ED visit within 30 days; Fig. 3b, c and no ED visit within 30 days; Fig. 3d, e. Figure 3b, d present a visual confirmation of the proportion of patients in the VAE-kNN prediction neighborhood who experienced an ED visit. Figure 3c, e present SHAP values for the individual patient the prediction was made for.

a Overall feature/value importance. b, c High risk example patient. d, e Low risk example patient. b/d Predicted risk displayed as an easy-to-understand pictogram. c/e Top 10 feature/value contributions for each example patient. ED: emergency department, SHAP: Shapely addictive explanations. Med: Medicine, CO2: Carbon dioxide, PTT: Partial thrombastin time, PT: Prothrombin time, PSA: Prostate specific antigen, HCT: Hematocrit, INR: International normalized ratio, LDH: Low-density lipoprotein, AST: Aspartate aminotransferase, ANC: Neutrophil count, WBC: White blood count, HGB: Hemoglobin, Pct: Percentage. Source data is in Supplementary Data 4. Error bars represent 95% confidence interval.

Data health monitoring

Our weekly assessments of model health throughout the 77-day production assessment period indicated acceptable PSI (Fig. 4b) for most weeks. We were able to detect a 2-week period in which prediction volume was far lower due to issues with the application processing interface (API) connection to the EHR. This issue was resultingly resolved using the system shown in Fig. 1.

Weekly metrics used to assess data health and model performance. Leading metrics: a Volume of predictions. b PSI value for all features. c Average predicted risk. Lagging metrics: d Percent of actual ED visits. e, f AUC and Brier scores. The drop in volume and spike in PSI was due to an EHR data feed outage and is described in the Discussion section. PSI: population stability index. Source data is in Supplementary Data 4. Error bars represent 95% confidence interval, N for analysis shown in (b–f) are given in (a).

Performance and fairness monitoring

We monitored lagging indicators of model health as soon as the ground truth information was available within the EHR to determine if the patient had gone to the ED or not. Table 3 displays average performance scores over the deployment period. Figure 4d demonstrates that there were fewer ED visits for patients in the production period than were represented in the training datasets. The reduced number of visits to the ED may have been a driving factor in our discrimination performance dropping slightly, on average, across the production assessment period (Fig. 4e). The calibration of our model was maintained, compared to testing, across the production period (Fig. 4f).

Discussion

In this paper we demonstrate cutting edge performance in the task of predicting 30-day ED use for cancer patients while providing a novel method for communicating model outputs to clinicians. We introduce the concept of leading and lagging indicators and demonstrate how model health can be constantly monitored before the 30-day prediction period has elapsed and ground truth data becomes available. As far as we are aware, this is the first example of a model which has been developed, deployed, and subjected to an extensive period of comprehensive data quality, model behavior, and bias monitoring.

We demonstrate that our algorithm performs at a greater or equal level to other published oncology prediction models developed using the EHR to predict unplanned ED use in either general oncology populations or specific cancer types or treatments6,7,8,9,10,11,12,13,14. Uniquely, we are able to demonstrate the consistency of this performance on live data during our production implementation. In addition, we introduce the concept of leading indicators of model health as tools to generate rapid diagnostic to support longer prediction timeframe and safety of the delivered predictions12.

At the core of our work was the desire to make an algorithm that demonstrated good performance during development and validation, but which also was able to maintain that performance during production where it was monitored in live deployment at an academic medical center in the United States. Consistent with our expectations, overall model performance did not drop-off during production.

Maintaining model performance is dependent on maintaining data and code quality. These three dependent concepts are a requirement for delivering high quality models. During the weeks of May 16th and May 23rd, 2022 we noticed that the number of predictions dropped sharply, as did our average predicted risk. Conversely, our data drift monitor spiked. We note that this issue would likely not have been noticed if we were monitoring for model performance alone. An investigation found that data quality had dropped due to outages in EHR data feeds that the presented ED risk model relies upon. Our model was receiving incomplete data for the predictions performed during this time span. Using the data health monitors in place and collaboration between the data teams at both institutions, the issues were quickly resolved, which is reflected in our data health metrics returning to their expected ranges.

Our process for monitoring data quality proved useful in this study where our AI system was put into production, but predictions were not fed back to providers. The importance of model behavior and data quality monitoring will increase when the predictions are fed back to clinicians as part of a decision support system. In such a system, we expect that the number of high-risk patients that are admitted to the ED will decrease as effective clinical interventions are deployed as a result of the model predictions. The expected decrease in true positive rate will make it impossible to monitor model performance by relying only on lagging indicators of prediction quality such as AUC, as other studies have done14.

Further research is required to assess the impact of the model upon clinical decision making and subsequent improvements in patient outcomes resulting from the model. New techniques to interpret leading indicators of model health will allow greater insight into how changes in data distributions and model behavior around the time of prediction might impact model performance without having to rely on lagging indicators which may not be useful in the case of an in-production model. In addition, another key component of an effective ML model is the communication of its outputs to the target audience. In this work, we used a kNN algorithm which has unique properties to offer ways of model output communications to clinicians and patients. To maintain this paper in a manageable length, we focused this paper on the performance of the models and our ability to monitor their behavior over time. Another paper describing how to leverage the properties to design the model output feedback system with the involvement of end-users, including clinicians, nurses, and oncologists, is under preparation.

We acknowledge some limitations in our work; we were able to produce a model which was found to be suitable for use at two departments within a single large academic cancer center in the United States. We attempted to deploy informatics processes which could be standardized across sites using similar data sources in the United States, but were unable to assess whether our featurization, development, and validation pipelines could create acceptable models at other institutions or for other disease sites. In addition, studies have shown that other forms of data which are not necessarily linked to the EHR, such as patient-reported outcomes and radiation parameters, may provide a strong signal and improve the performance of prediction models and their inclusion may well have improved the performance of our model for this task.

In concusion, we present a strategy for ensuring that ML models can be develop, deployed, and proactively monitored to ensure consistent unbiased performance during the model lifetime, as far as we are aware, our approach is novel in the healthcare setting. Our model that displayed state-of-the-art performance on the prediction task and maintained the equivalent performance across demographic groups when exposed to live data over a period of 3 months. Future work should be conducted to estimate the performance of this pipeline in other settings as well as to create new strategies to identify threats to model performance via leading indicators of model health and behavior.

Data availability

All data relating to the study are presented in the paper and supplementary materials. Patient data used to create, validate, and monitor our models are not publicly available due to ethical concerns. It may be possible to make data available as part of a future academic collaboration requiring separate institutional data collaboration agreements and additional IRB approval. Please contact Dr. C Gibbons to discuss. Source data for the figures are available as Supplementary Data 4.

Code availability

Analysis code can be accessed from the following repository:31 https://zenodo.org/record/7888547 (https://doi.org/10.5281/zenodo.7888547)

References

Islami, F. et al. Annual Report to the Nation on the Status of Cancer, Part 1: National Cancer Statistics. J. Natl Cancer Institute 113, 1648–1669 (2021).

Panattoni, L. et al. Characterizing potentially preventable cancer- and chronic disease?related emergency department use in the year after treatment initiation: A regional study. J. Oncol. Pract. 14, e176–e185 (2018).

Mayer, D. K., Travers, D., Wyss, A., Leak, A. & Waller, A. Why Do Patients With Cancer Visit Emergency Departments? Results of a 2008 Population Study in North Carolina. J. Clin. Oncol. 29, 2683 (2011).

Harrison, J. M. et al. Toxicity-related factors associated with use of services among community oncology patients. J. Oncol. Pract. 12, e818–e827 (2016).

Handley, N. R., Schuchter, L. M. & Bekelman, J. E. Best Practices for Reducing Unplanned Acute Care for Patients With Cancer. 14, 306–313 https://doi.org/10.1200/JOP.17.00081 (2018).

Xie, F. et al. Development and validation of an interpretable machine learning scoring tool for estimating time to emergency readmissions. EClinicalMedicine 45, 101315 (2022).

Leonard, G. et al. Machine Learning Improves Prediction Over Logistic Regression on Resected Colon Cancer Patients. J. Surg. Res. 275, 181–193 (2022).

Rodriguez-Brazzarola, P. et al. Predicting the risk of VISIT emergency department (ED) in lung cancer patients using machine learning. 38, 2042–2042 https://doi.org/10.1200/JCO.2020.38.15_suppl.2042 (2020).

Mayo, C. et al. Anticipating Poor Outcomes: A Prognostic Machine Learning Model of Unplanned Visits to the Emergency Department for Patients Undergoing Treatment for Head and Neck Cancer Using Comprehensive Multi-Factor Electronic Health Records. Int. J. Radiat. Oncology*Biology*Phys. 111, S64–S65 (2021).

Sutradhar, R. & Barbera, L. Comparing an Artificial Neural Network to Logistic Regression for Predicting ED Visit Risk Among Patients With Cancer: A Population-Based Cohort Study. J. Pain Symptom Manage 60, 1–9 (2020).

Bolourani, S. et al. Using machine learning to predict early readmission following esophagectomy. J. Thorac. Cardiovasc. Surg. 161, 1926–1939.e8 (2021).

Hong, J. C., Niedzwiecki, D., Palta, M. & Tenenbaum, J. D. Predicting Emergency Visits and Hospital Admissions During Radiation and Chemoradiation: An Internally Validated Pretreatment Machine Learning Algorithm. JCO Clin. Cancer Inform. 1–11 https://doi.org/10.1200/cci.18.00037 (2018).

Peterson, D. J., Ostberg, N. P., Blayney, D. W., Brooks, J. D. & Hernandez-Boussard, T. Machine Learning Applied to Electronic Health Records: Identification of Chemotherapy Patients at High Risk for Preventable Emergency Department Visits and Hospital Admissions. JCO Clin. Cancer Inform. 1106–1126 https://doi.org/10.1200/cci.21.00116 (2021).

Coombs, L. et al. A machine learning framework supporting prospective clinical decisions applied to risk prediction in oncology. npj Digit. Med. 2022 5:1 5, 1–9 (2022).

The White House. Blueprint for an AI Bill of Rights. https://www.whitehouse.gov/ostp/ai-bill-of-rights/.

Sculley, D. et al. Hidden Technical Debt in Machine Learning Systems. Adv. Neural Inf. Proc. Syst. 28, (2015).

Nohara, Y., Matsumoto, K., Soejima, H. & Nakashima, N. Explanation of machine learning models using shapley additive explanation and application for real data in hospital. Comput. Methods Programs Biomed. 214, 106584 (2022).

Office of the National Coordinator for Health Information Technology (ONC), Department of Health and Human Services. “United States Core Data for Interoperability (USCDI).” (2018).

Hudson, K. L. & Collins, F. S. The 21st Century Cures Act — A View from the NIH. N Engl J. Med. 376, 111–113 (2017).

Kingma, D. P. & Welling, M. Auto-Encoding Variational Bayes. 2nd International Conference on Learning Representations, ICLR 2014 - Conference Track Proceedings https://doi.org/10.48550/arxiv.1312.6114 (2013).

van den Oord DeepMind, A., Li DeepMind, Y. & Vinyals DeepMind, O. Representation Learning with Contrastive Predictive Coding. https://doi.org/10.48550/arxiv.1807.03748 (2018).

Khosla, P. et al. Supervised Contrastive Learning. (2020) https://doi.org/10.48550/arxiv.2004.11362.

Chen, T., Kornblith, S., Norouzi, M. & Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. 37th Int. Confer. Mach. Learn. ICML 2020 PartF168147-3, 1575–1585 (2020).

Zou, H. & Hastie, T. Regularization and variable selection via the elastic net. J. R Stat. Soc. Series B Stat. Methodol. 67, 301–320 (2005).

Breiman, L. Random Forests. Mach. Learn 45, 5–32 (2001).

Pearl, J. Probabilistic reasoning in intelligent systems: networks of plausible inference (Morgan kaufmann series in representation and reasoning). (Morgan Kaufmann Publishers, San Mateo, Calif., 1988).

Hastie, T. & Robert Tibshirani, A. Discriminant Adaptive Nearest Neighbor Classification and Regression. Adv. Neural Inf. Proc. Syst. 8, (1995).

Böken, B. On the appropriateness of Platt scaling in classifier calibration. Inf. Syst. 95, 101641 (2021).

Blattenberger, G. & Lad, F. Separating the brier score into calibration and refinement components: A graphical exposition. Am. Statis. 39, 26–32 (1985).

Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. M. Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD): the TRIPOD Statement. Br. J. Surg. 102, 148–158 (2015).

benatronin. projectronin/ds-ed-risk-publication: Publication analysis code. (2023) https://doi.org/10.5281/ZENODO.7888547 (2023).

Acknowledgements

Hao Sun and Kurt Weber for generating value sets required for featurization. Natalie Jeha and Michelle Abramowski for contributing to feature selection and output vetting. Max Kaufmann and Neehar Mukne for contributing NLP models to identify ED visits from EHR notes.

Author information

Authors and Affiliations

Contributions

R.D.G. and C.L.S. conceptualized and managed the study. A.G. and A.W. developed featurization software. S.W., M.A., and K.B. provided clinical expertize and developed value sets for medical codes. R.D.G., B.H.E., A.G., and A.W. performed model training and validation, SHAP computations and analysis, and developed monitoring software. R.D.G., B.H.E., and L.K. performed analysis of results. C.L.S., R.D.G., and B.H.E. co-authored the paper and generated graphics and figures. C.S.G. and S.C.L. provided clinical expertize and co-authored the paper. C.S.G. and S.C.L. performed literature review.

Corresponding author

Ethics declarations

Competing interests

The authors declare the following competing interests: R.G., B.E., A.W., A.G., S.W., M.A., K.B., L.K., and C.S. all have stock options in Project Ronin, who funded this research. C.S.G. has received research funding, including salary support, for Project Ronin. Other authors report no competing interests.

Peer review

Peer review information

Communications Medicine thanks the anonymous reviewers for their contribution to the peer review of this work. A peer review file is available

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

George, R., Ellis, B., West, A. et al. Ensuring fair, safe, and interpretable artificial intelligence-based prediction tools in a real-world oncological setting. Commun Med 3, 88 (2023). https://doi.org/10.1038/s43856-023-00317-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s43856-023-00317-6